The phrase “content is king” has been around for a long time, but 2011 was the year Google put a crown on its head. Before the Panda update, it was possible to rank with thin, unhelpful pages as long as you checked the right technical boxes. That all changed when the algorithm started to evaluate the actual quality of a site’s content. Suddenly, creating genuinely useful, original, and well-researched articles wasn’t just good practice; it was a requirement for survival. This shift forced a move away from manipulative tactics and toward a user-first approach that remains the core of any sustainable SEO strategy.

Key Takeaways

- Focus on Content That Helps People: The 2011 updates made it clear that SEO success depends on creating valuable, original content that answers a user’s questions, not just pages filled with keywords.

- Earn Links, Don’t Just Build Them: The value of a link shifted from quantity to quality. A successful strategy involves creating content so useful that other reputable sites want to link to it naturally.

- Technical SEO is the Foundation for a Good User Experience: Factors like site speed, mobile-friendliness, and a clear site structure became essential because they directly affect how a user interacts with your site, which is a key quality signal for search engines.

How Google’s Algorithm Shifted in 2011

The year 2011 marked a turning point for search engine optimization. Before this, SEO was often a game of technical tricks and quantity over quality. Google changed the rules with updates that fundamentally reshaped how websites are ranked, shifting the focus from simply matching keywords to providing a valuable user experience. This was the year that content truly became king, forcing SEO professionals to adapt their strategies to prioritize substance and relevance. Understanding these changes is key to grasping the principles that still guide effective SEO.

Understanding the Panda Update

Introduced in February 2011, the Google Panda update was a direct response to low-quality websites. Its goal was to reduce the rankings of “content farms,” sites that produced large volumes of thin or duplicate content just to capture keyword traffic. Before Panda, these sites often cluttered search results, pushing down websites with more valuable information. Panda assessed quality across a whole site rather than page by page, so a large volume of weak pages could drag down rankings for an entire domain. As a result, sites with original, helpful articles saw their rankings improve, while those relying on spammy tactics lost visibility, forcing a major industry-wide cleanup.

Why Content Quality Became a Ranking Factor

The Panda update wasn’t just about penalizing bad actors; it was about rewarding good ones. By making content quality a significant ranking factor, Google signaled that the best way to succeed in search is to create content that genuinely helps people. High-quality content underpins a successful online strategy, serving as the foundation for everything from search rankings to audience engagement. This shift forced marketers to think like publishers, focusing on creating articles and resources that were well-written, accurate, and comprehensive. The era of keyword stuffing was over, replaced by a new standard where value determined visibility.

The New Emphasis on User Experience

Ultimately, the changes in 2011 were driven by a renewed focus on the user. Google’s business depends on providing relevant and helpful search results, and low-quality content creates a poor user experience. The algorithm updates were designed to better align search rankings with what users find valuable. By prioritizing sites with strong content, Google could ensure that people clicking through from a search result would land on a page that answered their questions. This emphasis on user satisfaction built on the site-speed signal Google had introduced in 2010. It also laid the groundwork for later updates focused on signals like mobile-friendliness, reinforcing that good SEO is synonymous with a great user experience.

Putting Quality Content First

The Panda update in 2011 marked a significant turning point for SEO, shifting the focus from technical tricks to the actual substance of a website’s content. Before this, it was common to see low-quality pages ranking highly simply because they were optimized for search engine crawlers, not for human readers. Panda changed the rules by evaluating the quality of a site’s content and penalizing those with thin, duplicate, or unhelpful pages. This was Google’s way of saying that the user experience matters most.

This update forced marketers and site owners to ask critical questions about their content strategy. Is this content genuinely useful to my audience? Is it original and well-researched? Does it provide real value? Suddenly, content wasn’t just a vehicle for keywords; it was the core of a successful SEO strategy. This principle remains true today. Creating high-quality, user-focused content is the most sustainable way to achieve and maintain high search rankings. Modern SEO tools can help generate and optimize this content, but the underlying goal is always to serve the reader first.

Moving Past Keyword Stuffing

Before 2011, keyword stuffing was a common and often effective tactic. This involved loading a webpage with a target keyword over and over again in an attempt to signal its relevance to search engines. The text often sounded unnatural and was difficult to read, but it worked because early algorithms relied heavily on keyword frequency as a primary ranking signal.

The Panda update was designed to identify and penalize sites that relied on such manipulative practices. Google’s algorithms became more sophisticated, learning to understand context and semantic relevance. As a result, the focus shifted from keyword density to creating content that used keywords naturally within a helpful, comprehensive discussion of a topic. This change forced a move toward a more user-centric approach, where the goal is to answer a user’s query thoroughly rather than just repeating a term.
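To make “keyword density” concrete: it was usually expressed as the share of a page’s words taken up by the target keyword. The Python sketch below assumes a simple definition: exact, case-insensitive phrase matches, with every word of each match counted. It illustrates the metric that stuffing tried to inflate, not any formula Google has published.

```python
import re

def keyword_density(text: str, keyword: str) -> float:
    """Fraction of the words in `text` that belong to occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = keyword.lower().split()
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the keyword phrase starts (exact word match).
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return hits * n / len(words)


stuffed = "Cheap shoes. Buy cheap shoes. Our cheap shoes are the best cheap shoes."
natural = ("Choosing running shoes starts with your gait, the surface you run on, "
           "and how much cushioning feels comfortable over long distances.")
print(f"{keyword_density(stuffed, 'cheap shoes'):.0%}")    # 62%
print(f"{keyword_density(natural, 'running shoes'):.0%}")  # 10%
```

The stuffed sentence hits an absurd density. The natural one mentions the keyword once and spends the rest of its words actually helping the reader.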

Prioritizing Content Depth and Value

With Panda, Google began to actively demote sites with “thin” or low-quality content. Thin content refers to pages that offer little to no real value to the user. This could include pages with very little text, auto-generated content, or articles that simply rehash information found elsewhere without adding original insight. The update aimed to reward websites that invested in creating original, in-depth, and valuable resources.

This meant that simply having a blog was no longer enough. The content on that blog had to be good. Websites with well-researched articles, detailed guides, and unique perspectives started to see their rankings improve. This principle has only become more important over time. Search engines now favor content that demonstrates expertise and trustworthiness. Tools that help identify content gaps and add new, relevant information to existing articles are essential for maintaining this depth and value.
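Google hasn’t published how Panda spotted duplicated or rehashed pages. You can still audit your own site for near-duplicates with a common, generic technique: break each page into overlapping word sequences (“shingles”) and compare the resulting sets with Jaccard similarity. The sketch below assumes three-word shingles. The similarity that counts as “too close” is a threshold you would choose yourself.

```python
import re

def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences ("shingles") in `text`."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def similarity(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets: 1.0 means identical wording."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


a = "Panda lowered the rankings of sites that published thin content"
b = "Panda lowered the rankings of sites that published thin articles"  # a light rewording
c = "Site speed became a ranking signal in 2010"
print(round(similarity(a, b), 3))  # 0.778
print(similarity(a, c))            # 0.0
```

Pages that score close to 1.0 against each other are candidates for consolidating, rewriting with genuinely new information, or redirecting.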

Measuring User Engagement

Google has never explicitly confirmed all the signals it uses, but after the 2011 updates many SEOs came to treat user engagement as a practical proxy for content quality. Suppose a user clicks on a search result, stays on the page to read the content, and doesn’t immediately return to the search results. That suggests the page was a good answer to their query. The opposite behavior is known as “pogo-sticking”: the user clicks a result, bounces straight back to the results, and tries another. It suggests the page fell short.

Metrics like time on page, bounce rate, and click-through rate (CTR) became widely used, if indirect, indicators of content quality. These are diagnostics you can measure rather than confirmed ranking factors, but the logic is straightforward: if your content is valuable and engaging, people will stick around to consume it. Focusing on a positive user experience and creating content that holds a reader’s attention became an essential part of on-page SEO.

How Content Freshness Became a Factor

In November 2011, Google also rolled out what became known as the “freshness” update, which built on an existing concept known as “Query Deserves Freshness” (QDF). This algorithm change recognized that for certain types of searches, users expect the most current information. For topics like breaking news, recurring events, or trending product reviews, newer content was given a ranking advantage. This didn’t apply to all searches; evergreen topics like “how to tie a tie” don’t require constant updates. Even so, it made content freshness an explicit ranking consideration for time-sensitive queries.

This update encouraged creators to not only publish new content but also to regularly review and update their existing articles. Keeping content current and accurate became another way to signal quality and relevance to both users and search engines. Regularly updating articles with new information, statistics, or insights helps maintain their value over time, ensuring they continue to perform well in search results.
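A simple way to act on this is a review queue of articles that haven’t been touched in a while. In the sketch below, the one-year default and the last_updated mapping are assumptions. In practice the dates would come from your CMS, and only time-sensitive topics may need a short review cycle.

```python
from datetime import date, timedelta

def stale_articles(last_updated: dict[str, date], today: date,
                   max_age_days: int = 365) -> list[str]:
    """Return URLs not updated within max_age_days, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    # Sort (date, url) pairs so the longest-neglected articles come first.
    stale = sorted((updated, url) for url, updated in last_updated.items() if updated < cutoff)
    return [url for _, url in stale]


# Hypothetical publishing history.
articles = {
    "/guide-a": date(2011, 1, 10),
    "/guide-b": date(2011, 11, 3),
    "/guide-c": date(2010, 6, 1),
}
print(stale_articles(articles, today=date(2011, 12, 1), max_age_days=180))  # ['/guide-c', '/guide-a']
```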

Mastering Technical SEO Essentials

Beyond content and links, 2011 was a year that solidified the importance of a strong technical foundation. Think of it as the framework of your house; without it, even the best interior design won’t hold up. Google’s algorithm began to more heavily weigh factors that influenced how easily its crawlers could access, understand, and index your site. This meant that the behind-the-scenes work became just as critical as the content users saw.

Getting the technical details right was about speaking Google’s language. It involved optimizing everything from site speed and URL structure to how you handled moved or deleted pages. These weren’t just one-off fixes. They were fundamental practices that created a better experience for both search engines and human visitors, setting the stage for long-term ranking success. For businesses looking to compete, mastering these essentials was no longer optional. This shift required marketers and developers to work more closely together, ensuring that websites were not only filled with great content but were also built on a solid, search-friendly architecture.

Optimizing for Site Speed

Even back in 2011, speed mattered. In April 2010, Google had announced that site speed was a ranking signal, connecting faster load times with a better user experience. The logic was simple: pages that loaded quickly kept users engaged, leading to lower bounce rates and more time spent on site. A slow website, on the other hand, created friction and frustrated visitors, who would often leave before the content even appeared. This shift pushed webmasters to start optimizing images, leveraging browser caching, and minimizing code to shave precious seconds off their load times. It was one of the first clear signals that technical performance directly impacted search visibility.
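Browser caching is controlled by HTTP response headers such as Cache-Control. The sketch below assumes a hypothetical policy table, with long lifetimes for CSS, JavaScript, and images and a short one for HTML. It returns the header a server might send for each file type. The specific max-age values are illustrative, not recommendations.

```python
from pathlib import PurePosixPath

# Hypothetical caching policy, in seconds, keyed by file extension.
MAX_AGE_BY_SUFFIX = {
    ".css": 31_536_000,   # one year
    ".js": 31_536_000,
    ".png": 2_592_000,    # 30 days
    ".jpg": 2_592_000,
    ".html": 300,         # five minutes, so content edits show up quickly
}

def cache_headers(path: str) -> dict[str, str]:
    """Return the Cache-Control header to send for a static file path."""
    suffix = PurePosixPath(path).suffix.lower()
    max_age = MAX_AGE_BY_SUFFIX.get(suffix)
    if max_age is None:
        # "no-cache" lets browsers store the response but forces revalidation before reuse.
        return {"Cache-Control": "no-cache"}
    return {"Cache-Control": f"public, max-age={max_age}"}


for path in ["/static/site.css", "/img/logo.png", "/index.html", "/api/data"]:
    print(path, cache_headers(path)["Cache-Control"])
```

Year-long lifetimes only make sense if asset filenames change whenever their contents do (for example, site.3f9a.css). Otherwise visitors can keep a stale copy.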

Creating a Mobile-Friendly Design

The rise of the smartphone was well underway by 2011, and search behavior was changing with it. While Google’s official shift to mobile-first indexing was still years away, the groundwork was being laid. It was becoming clear that a desktop site simply shrunk down to fit a small screen wasn’t good enough. Users needed readable text, tappable buttons, and a design that didn’t require constant pinching and zooming. Websites that offered a dedicated mobile version or an early responsive design provided a superior experience for a growing segment of searchers. This focus on mobile usability was an early indicator of where the web was heading.

Structuring URLs and Site Architecture

A logical site structure and clean URLs became key components of technical SEO. A well-organized website helps search engines understand the hierarchy of your content and how different pages relate to one another. A clear, SEO-friendly URL structure that was easy for both users and crawlers to read complemented this. Instead of long strings of numbers and characters, best practices called for short, descriptive URLs that included relevant keywords. This not only helped search engines grasp the page’s topic but also made links more trustworthy and shareable for users, improving the overall experience.
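Generating short, descriptive URLs from page titles is easy to automate. This sketch assumes a small, illustrative stop-word list and a six-word cap. Both are judgment calls rather than rules.

```python
import re
import unicodedata

# Illustrative stop-word list; extend or drop it to suit your own URL conventions.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "for", "on"}

def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Decompose accented characters and drop anything non-ASCII (é -> e, curly apostrophes removed).
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.lower().replace("'", "")  # "google's" -> "googles"
    words = re.findall(r"[a-z0-9]+", text)
    kept = [w for w in words if w not in STOP_WORDS] or words  # never return an empty slug
    return "-".join(kept[:max_words])


print(slugify("The Complete Guide to Google's Panda Update (2011)"))
# complete-guide-googles-panda-update-2011
```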

Developing an Internal Linking Strategy

Internal links act as the pathways of your website, guiding both users and search engine crawlers from one page to another. In 2011, a thoughtful internal linking strategy was crucial for distributing page authority and establishing a clear content hierarchy. By linking from high-authority pages to other relevant pages on your site, you could pass some of that link equity along, helping newer or deeper pages get discovered and rank. For users, these links provided a helpful path to related content, encouraging them to explore more of your site and increasing their time on page. It was a simple yet powerful way to improve both SEO and user navigation.
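To see how internal links can spread “link equity,” here is the classic PageRank calculation from Google’s original research, run over a tiny hypothetical site. It’s a simplification. The 0.85 damping factor comes from that original description, and Google’s current handling of links is not public.

```python
def internal_pagerank(links: dict[str, list[str]], damping: float = 0.85,
                      iterations: int = 50) -> dict[str, float]:
    """Classic PageRank by power iteration over {page: [pages it links to]}."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share; the rest flows along links.
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A page with no outgoing links spreads its rank evenly over all pages.
                for p in pages:
                    new[p] += damping * rank[page] / n
        rank = new
    return rank


# Hypothetical site structure.
site = {
    "/": ["/guide", "/blog"],
    "/guide": ["/"],
    "/blog": ["/", "/blog/old-post"],
    "/blog/old-post": [],  # deep page that links nowhere
}
for page, score in sorted(internal_pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{page:16} {score:.3f}")
```

In this toy graph, adding a link from / to /blog/old-post raises the deep page’s score. That is the intuition behind linking from strong pages to the ones you want discovered.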

Using 301 Redirects Correctly

As websites evolve, content inevitably moves or gets deleted. Handling these changes correctly was essential for preserving your SEO value. The 301 redirect became the standard tool for this, signaling to search engines that a page had permanently moved to a new location. Implementing a 301 redirect meant that the link equity or authority built up by the old URL was largely passed on to the new one. This prevented broken links, which create a poor user experience, and stopped you from losing the hard-earned value of your backlinks. Proper redirect management was a fundamental part of website maintenance that protected your rankings during site updates or redesigns.
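Redirects are usually configured in the web server, for example with Apache’s Redirect 301 directive or nginx’s return 301. The logic is still easy to sketch in Python. The hypothetical example below also collapses redirect chains, so a URL that moved twice during redesigns points straight to its final address. Without that step, users and crawlers would have to follow several hops.

```python
def flatten_redirects(moves: dict[str, str]) -> dict[str, str]:
    """Map every old URL straight to its final destination, collapsing chains like A -> B -> C."""
    final = {}
    for old, target in moves.items():
        seen = {old}
        while target in moves:
            if target in seen:
                raise ValueError(f"redirect loop involving {old}")
            seen.add(target)
            target = moves[target]
        final[old] = target
    return final


def respond(path: str, redirects: dict[str, str]) -> tuple[int, dict[str, str]]:
    """Return (status, headers): a permanent 301 with a Location header for moved URLs, else 200."""
    if path in redirects:
        return 301, {"Location": redirects[path]}
    return 200, {}


# Hypothetical history: a guide was moved twice during redesigns.
moves = {"/seo-tips.html": "/blog/seo-tips", "/blog/seo-tips": "/guides/seo"}
redirects = flatten_redirects(moves)
print(respond("/seo-tips.html", redirects))  # (301, {'Location': '/guides/seo'})
```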

Rethinking Link Building and Social Signals

The year 2011 marked a major turning point for off-page SEO. The old playbook of accumulating as many links as possible, regardless of their source, was rapidly becoming obsolete. Google’s Panda update set off an industry-wide shift toward quality and relevance that soon extended to links. At the same time, the explosive growth of social media introduced a new and powerful set of signals that search engines couldn’t ignore. This new landscape required a complete overhaul of link-building strategies, moving from artificial tactics to a more holistic approach centered on earning authority and creating genuine value.

This period forced marketers to rethink the very definition of a “link.” It was no longer just a hyperlink; it was a vote of confidence from one site to another. Social shares, while not direct links, became a similar type of endorsement, signaling to search engines that content was resonating with a live audience. The strategies that emerged from this era—focusing on high-quality content, earning links naturally, and building a diverse backlink profile—became the bedrock of modern SEO. While the tactics have evolved, these foundational principles remain. Today, tools can help automate the process of identifying opportunities and managing outreach, but the core goal of earning trust and authority is unchanged.

How Social Media Influenced Rankings

Before 2011, social media was often treated as a separate marketing channel, disconnected from SEO. But that changed as Google began to take social signals more seriously. While the exact weight of likes, shares, and tweets in the ranking algorithm was a topic of debate, a strong correlation emerged: pages with high social engagement often ranked better. This wasn’t necessarily because a “like” was a direct ranking factor. Instead, social media acted as a powerful catalyst. Content that went viral on platforms like Facebook and Twitter naturally attracted more eyeballs, which in turn led to more organic backlinks from bloggers and journalists who discovered the content through social channels. These social signals were seen as a real-time measure of a content piece’s relevance and popularity, aligning perfectly with Google’s mission to deliver high-quality search results.

Using Social Media to Build Authority

Beyond just generating signals for specific pages, social media became an essential tool for building overall brand authority. Consistently sharing valuable content, engaging with followers, and participating in industry conversations helped businesses establish themselves as trusted voices. This brand-building effort had a direct impact on SEO. As a company’s reputation grew on social platforms, so did its likelihood of earning natural mentions and high-quality backlinks from other authoritative sources. By regularly updating content and using social media as a primary distribution channel, brands could create a positive feedback loop where social authority and search authority reinforced each other, leading to sustained growth in rankings.

Focusing on Link Quality, Not Quantity

The Panda update was a wake-up call for anyone still relying on outdated tactics. Panda itself targeted low-quality content rather than links, and it caused many sites to lose significant traffic overnight. Its message applied to link building too: Google was committed to rewarding websites that provide genuine value to users. Buying links from spammy directories and participating in link farms became increasingly risky, and Google went after manipulative link profiles directly with the Penguin update in April 2012. The quality of a link mattered far more than the sheer quantity. One editorially given link from a respected, relevant website could be worth more than hundreds of links from low-authority domains. This forced SEOs to stop asking, “How can I get more links?” and start asking, “How can I earn links from the right places?”

Acquiring Links Naturally

With the focus shifted to quality, the best way to build links was to stop “building” them altogether and start “earning” them. This meant creating content so valuable, interesting, or useful that other people would want to link to it organically. Content marketing became a cornerstone of modern SEO. Strategies revolved around producing link-worthy assets like original research, in-depth guides, compelling infographics, and free tools. The goal was to create something that served as a primary resource on a topic. When you publish exceptional content, links become a natural byproduct of your marketing efforts rather than the result of manual outreach. This approach aligned with how search engines index and rank content, rewarding sites that genuinely deserved the authority that backlinks confer.

The Importance of a Diverse Link Profile

As Google’s algorithm grew more sophisticated, it also got better at spotting unnatural patterns. A website with thousands of backlinks all using the exact same keyword-rich anchor text looked manipulative and raised a red flag. In 2011, building a diverse and natural-looking link profile became critical. This meant acquiring links from a variety of sources, including industry blogs, news outlets, resource pages, and relevant directories. It also meant encouraging a mix of anchor text, including branded terms (e.g., “MEGA AI”), naked URLs (e.g., “www.gomega.ai”), and generic phrases (e.g., “click here”), in addition to target keywords. By understanding the impact of these changes, businesses could adapt their strategies to build a backlink profile that looked organic and could withstand future algorithm updates.
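Once you’ve exported your backlinks from a tool, a quick audit of your anchor-text mix is easy to script. The sketch below uses the article’s four categories with a hypothetical brand (“Acme SEO”) and domain (acme-seo.example). It deliberately leaves out any “safe” percentages, since Google doesn’t publish them.

```python
from collections import Counter

def classify_anchor(text: str, brand: str, domain: str, generic: set[str]) -> str:
    """Assign one anchor text to a category: naked URL, branded, generic, or keyword."""
    t = text.strip().lower()
    if domain.lower() in t:   # check the domain first, since a URL may also contain the brand
        return "naked URL"
    if brand.lower() in t:
        return "branded"
    if t in generic:
        return "generic"
    return "keyword"          # everything else is treated as a keyword-style anchor


def anchor_mix(anchors: list[str], brand: str, domain: str, generic: set[str]) -> dict[str, float]:
    """Share of backlinks falling into each anchor-text category."""
    counts = Counter(classify_anchor(a, brand, domain, generic) for a in anchors)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()} if total else {}


# Hypothetical backlink export.
anchors = ["Acme SEO", "www.acme-seo.example", "https://acme-seo.example/guide", "click here",
           "seo software", "seo software", "seo software", "seo software"]
print(anchor_mix(anchors, "Acme SEO", "acme-seo.example", {"click here", "read more"}))
```

Half of these links use the same keyword anchor, which is the kind of concentration the paragraph above warns about.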

Adapting Your On-Page Optimization

The 2011 algorithm updates fundamentally changed the on-page optimization playbook. Before, it was often a technical exercise in placing keywords. After Panda, it became an art form centered on user experience. Google started rewarding pages that were not just keyword-relevant, but also well-structured, easy to read, and genuinely helpful. This meant marketers had to shift their focus from simply checking boxes to creating a seamless and valuable experience for the user, starting from the moment they see your page in the search results. On-page SEO became less about manipulation and more about clear communication with both people and search engine crawlers.

Best Practices for Title Tags

Your title tag is the first thing a user sees in the search results, making it a critical piece of your on-page strategy. A well-crafted title should act as a clear and compelling headline for your page. It needs to accurately reflect the content while including your primary keyword in a natural way, ideally toward the beginning. The goal is to create a title that not only informs search engines about your page’s topic but also persuades a potential visitor to click. Think of it as the promise you make to the searcher. Fulfilling that promise with great content is the next step to building trust and authority.

Writing Effective Meta Descriptions

While meta descriptions aren’t a direct ranking factor, they have a major influence on your click-through rate (CTR). Think of the meta description as a short ad for your page: it summarizes the page’s content and gives the user a compelling reason to click. It should be around 150-160 characters and naturally include relevant keywords. Avoid generic descriptions; instead, focus on highlighting the specific value a user will get from visiting your page. An effective meta description sets clear expectations and entices the right audience.
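Both checks can be automated with Python’s built-in html.parser module. The sketch below flags a title that lacks the primary keyword or mentions it only in its second half. That halfway cut-off is an assumption standing in for “toward the beginning.” The sketch also flags a meta description outside the 150-160 character guideline.

```python
from html.parser import HTMLParser

class HeadParser(HTMLParser):
    """Collect the <title> text and the meta description from an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head(html: str, keyword: str) -> list[str]:
    """Return human-readable issues with the page's title tag and meta description."""
    parser = HeadParser()
    parser.feed(html)
    issues = []
    title = parser.title.strip()
    position = title.lower().find(keyword.lower())
    if position == -1:
        issues.append("keyword missing from title")
    elif position > len(title) // 2:  # assumption: "toward the beginning" = first half
        issues.append("keyword appears late in title")
    length = len(parser.description)
    if not 150 <= length <= 160:  # rough guideline from the text, not a hard limit
        issues.append(f"meta description is {length} characters (guideline: about 150-160)")
    return issues


print(audit_head("<title>Our Blog</title>", "panda"))
# ['keyword missing from title', 'meta description is 0 characters (guideline: about 150-160)']
```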

Structuring Your Content for Readability and SEO

The Panda update made it clear that how you structure your content matters. A wall of text is intimidating for readers and difficult for search engines to interpret. Breaking up your content with clear headings (H1, H2, H3), short paragraphs, and bullet points makes it much easier for users to scan and digest, which is how most people read online. This improved readability keeps people on your page longer, sending positive engagement signals to Google. For search engines, this structure creates a logical hierarchy, helping them understand the main topics and subtopics of your content.
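Heading structure is easy to check programmatically. This sketch uses Python’s html.parser module to list a page’s headings and flag jumps such as an H1 followed directly by an H3. Treating skipped levels as a problem is a readability and accessibility convention, not a documented Google rule.

```python
from html.parser import HTMLParser

class HeadingOutline(HTMLParser):
    """Collect (level, text) for every <h1>-<h6> in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[tuple[int, str]] = []
        self._open_level = 0  # 0 means "not currently inside a heading"

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._open_level = int(tag[1])
            self.headings.append((self._open_level, ""))

    def handle_endtag(self, tag):
        if self._open_level and tag == f"h{self._open_level}":
            self._open_level = 0

    def handle_data(self, data):
        if self._open_level:
            level, text = self.headings[-1]
            self.headings[-1] = (level, text + data)


def skipped_levels(html: str) -> list[str]:
    """Flag headings more than one level deeper than the previous one (a page starting at h2 is flagged too)."""
    parser = HeadingOutline()
    parser.feed(html)
    problems = []
    previous = 0
    for level, text in parser.headings:
        if level > previous + 1:
            problems.append(f"h{level} '{text.strip()}' skips a level")
        previous = level
    return problems


print(skipped_levels("<h1>Panda</h1><h3>Thin content</h3><h2>Fixes</h2><h3>Depth</h3>"))
# ["h3 'Thin content' skips a level"]
```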

Implementing HTML5

On a more technical note, the adoption of HTML5 provided new ways to signal content structure. HTML5 introduced semantic elements like <article>, <section>, and <nav>, which act as specific labels for different parts of a webpage. Using these elements correctly gives search engines clearer context about your content’s purpose. For example, wrapping your main blog post in an <article> tag marks where the primary content lives, separate from navigation and footers. Google hasn’t said how much weight it gives this markup. Still, it’s a more descriptive approach than using generic <div> tags for everything, and it makes your pages easier for any parser to interpret.
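As an illustration of why semantic markup helps, the toy parser below keeps only the text inside <article> elements and ignores the navigation and footer. It sketches what any parser could do with this markup. It is not a description of how Google processes pages.

```python
from html.parser import HTMLParser

class ArticleText(HTMLParser):
    """Collect text inside <article> elements, ignoring navigation and other page chrome."""

    def __init__(self) -> None:
        super().__init__()
        self.depth = 0  # how many <article> elements we are currently inside
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "article":
            self.depth += 1

    def handle_endtag(self, tag):
        if tag == "article" and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth and data.strip():
            self.chunks.append(data.strip())


def main_content(html: str) -> str:
    parser = ArticleText()
    parser.feed(html)
    return " ".join(parser.chunks)


page = ("<nav><a href='/'>Home</a></nav>"
        "<article><h1>Panda</h1><p>Quality matters.</p></article>"
        "<footer>(c) 2011</footer>")
print(main_content(page))  # Panda Quality matters.
```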

Guidelines for Using Keywords

The days of keyword stuffing ended abruptly in 2011. The new focus shifted to using keywords naturally within high-quality, relevant content. Instead of forcing keywords into sentences where they don’t belong, the goal is to write comprehensive content about a topic. When you do that, the right keywords and related phrases will appear organically. Over-optimizing can lead to penalties, so it’s essential to prioritize the user experience. Your content should be written for humans first. Modern SEO platforms can help you identify relevant keywords, but the real skill is weaving them into valuable content that answers a user’s query.

The Growing Importance of Local and Mobile Search

The rise of the smartphone changed how people accessed information, and Google’s algorithm began to change with it. In 2011, we saw the early signs of a major shift toward prioritizing search results based on a user’s location and the device they were using. This wasn’t just about showing a map; it was about understanding the context of a search. Someone looking for “pizza” on their phone was likely looking for a place to eat nearby, right now. This realization pushed local and mobile optimization from a niche tactic to a fundamental part of any effective SEO strategy. Businesses that adapted early gained a significant advantage by meeting customers where they were—on the go.

The Rise of Mobile Search

As smartphones became more common, so did searching from anywhere but a desk. Google’s algorithm updates started to reflect this reality, aiming to improve the quality of search results for a growing number of mobile users. While the official “mobile-friendly” update was still a few years away, the groundwork was being laid in 2011. Google began to recognize that a good user experience on a desktop didn’t always translate to a small screen. This trend signaled a clear need for websites to keep up with Google’s best practices. The focus was slowly but surely moving toward creating a seamless experience for users, no matter what device they were using to find information.

Integrating Local Search Signals

With the increase in mobile searches, a user’s physical location became a powerful signal of intent. Google started to place more weight on local search signals to deliver geographically relevant results. This meant that having a complete and accurate Google Places listing (the precursor to Google Business Profile) was suddenly critical. Businesses needed to ensure their name, address, and phone number (NAP) were consistent across the web. Consistent NAP data was widely believed to help search engines match mentions on directories and review sites to the same business. It was the beginning of local SEO as a distinct and necessary discipline for brick-and-mortar businesses.
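Checking NAP consistency across listings mostly comes down to normalizing trivial differences before comparing. The sketch below uses a hypothetical business and a deliberately small abbreviation table. It assumes 10-digit North American phone numbers and reports which sources disagree with the most common version.

```python
import re
from collections import Counter

# Illustrative abbreviation table; a real one would cover many more variants.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "suite": "ste", "road": "rd"}

def normalize_text(text: str) -> str:
    """Lowercase, drop apostrophes and punctuation, and standardize common abbreviations."""
    text = text.lower().replace("'", "").replace("’", "")
    return " ".join(ABBREVIATIONS.get(w, w) for w in re.findall(r"[a-z0-9]+", text))

def normalize_phone(phone: str) -> str:
    """Keep only digits; assumes 10-digit North American numbers with an optional leading 1."""
    return re.sub(r"\D", "", phone)[-10:]

def inconsistent_listings(listings: dict[str, tuple[str, str, str]]) -> list[str]:
    """Return sources whose (name, address, phone) differs from the most common version."""
    keys = {
        source: (normalize_text(name), normalize_text(address), normalize_phone(phone))
        for source, (name, address, phone) in listings.items()
    }
    canonical, _ = Counter(keys.values()).most_common(1)[0]
    return sorted(source for source, key in keys.items() if key != canonical)


# Hypothetical listings for one business.
listings = {
    "website": ("Joe's Plumbing", "12 Main Street, Suite 4", "(718) 555-0100"),
    "google_places": ("Joes Plumbing", "12 Main St Ste 4", "+1 718-555-0100"),
    "directory": ("Joe's Plumbing", "14 Main St", "718.555.0100"),
}
print(inconsistent_listings(listings))  # ['directory']
```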

Targeting by Geographic Location

Beyond just claiming a business listing, successful on-page strategy in 2011 started to involve targeting specific geographic locations. This meant creating content that was explicitly relevant to local customers. For example, a plumber would create pages for each city or neighborhood they served, using keywords like “emergency plumbing in Brooklyn.” This helped Google connect the business to location-based queries. Regularly updating content with local information and staying informed about algorithm updates became essential for maintaining and improving a website’s search engine rankings in local results. This approach made websites more useful to local searchers and sent clear relevance signals to Google.

Optimizing the Mobile User Experience

In 2011, optimizing for mobile was less about the sophisticated responsive designs we use now and more about basic usability. Google was not yet demoting sites for poor mobile usability; that arrived with the mobile-friendly update in 2015. Still, it was increasingly clear that hard-to-use mobile pages served searchers badly. This meant avoiding technologies like Flash, which didn’t run on iPhones or iPads, and ensuring text was readable without zooming. Many businesses opted to create separate mobile versions of their sites (like m.website.com) to provide a stripped-down, faster experience. Websites that consistently provided a user-friendly mobile experience were well positioned for later updates, setting the stage for the mobile-first indexing of the future.

Measuring What Matters: Analytics and Performance

The Panda update was a wake-up call for anyone who thought SEO was just about rankings and traffic volume. Suddenly, the quality of that traffic and how users interacted with a site became critically important. This shift forced a major change in how we measured success. It was no longer enough to see your site climb the search results; you had to prove that users who arrived were finding value. This made analytics the new cornerstone of any effective SEO strategy.

Understanding your website’s performance data became the key to survival and growth. Marketers had to move beyond vanity metrics and dig into the numbers that reflected user satisfaction and engagement. This meant tracking not just how many people visited, but what they did once they arrived. Did they stay and read? Did they click through to other pages? Or did they hit the back button immediately? Answering these questions with data was the only way to diagnose potential content issues and adapt to Google’s new standards. This focus on performance metrics laid the groundwork for the data-driven approach that defines modern SEO.

Defining Key Performance Indicators (KPIs)

Before 2011, the primary KPI for many SEO professionals was keyword ranking. After Panda, the definition of success expanded. While rankings still mattered, they became part of a larger picture. New KPIs emerged as crucial indicators of content quality and user experience. Metrics like bounce rate, average time on page, and pages per session became essential. A high bounce rate could signal that your content didn’t match search intent, while a low time on page suggested your content was unengaging or “thin.” Google’s algorithm updates forced marketers to adapt their measurement strategies to focus on what the algorithm now valued: a positive user experience.
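The KPIs above are simple ratios over session data. This sketch assumes a hypothetical Session record holding a page-view count and a duration. Real analytics tools differ in the details. For instance, older versions of Google Analytics recorded zero duration for single-page sessions, because time was measured between hits.

```python
from dataclasses import dataclass

@dataclass
class Session:
    """Hypothetical session record: pages viewed and total duration in seconds."""
    pageviews: int
    duration_seconds: float


def engagement_kpis(sessions: list[Session]) -> dict[str, float]:
    """Bounce rate, pages per session, and average session duration."""
    if not sessions:
        return {"bounce_rate": 0.0, "pages_per_session": 0.0, "avg_session_seconds": 0.0}
    n = len(sessions)
    bounces = sum(1 for s in sessions if s.pageviews == 1)  # single-page sessions
    return {
        "bounce_rate": bounces / n,
        "pages_per_session": sum(s.pageviews for s in sessions) / n,
        "avg_session_seconds": sum(s.duration_seconds for s in sessions) / n,
    }


sessions = [Session(1, 0), Session(3, 240), Session(1, 0), Session(4, 345)]
print(engagement_kpis(sessions))
# {'bounce_rate': 0.5, 'pages_per_session': 2.25, 'avg_session_seconds': 146.25}
```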

Analyzing User Behavior

The Panda update made it clear that Google was getting better at interpreting user behavior as a signal of quality. This meant SEOs had to become amateur data analysts, using tools like Google Analytics to understand the user journey. By analyzing user flow reports, you could see the paths people took through your site and identify pages where they frequently dropped off. This analysis helped pinpoint weak content that needed improvement or removal. Understanding how real people interacted with your website provided the insights needed to create content that was not only optimized for search engines but also genuinely helpful and engaging for your audience.

Understanding Key Ranking Factors

Panda fundamentally changed the conversation around ranking factors. While technical elements and backlinks remained important, content quality was now front and center. The update specifically targeted sites with thin, duplicate, or low-quality content, effectively making content value a significant ranking factor. This meant that creating original, well-researched, and valuable articles was no longer just good practice; it was essential for SEO. Websites that invested in high-quality content saw their performance metrics improve, which in turn sent positive signals to Google, creating a cycle of sustained search visibility.

Implementing Analytics Correctly

Having access to data is only useful if that data is accurate. The increased focus on analytics meant that proper implementation became more important than ever. A simple misconfiguration in Google Analytics, like failing to filter out internal office traffic or not setting up conversion goals correctly, could skew your data and lead to poor strategic decisions. Ensuring your analytics platform was set up to deliver clean, reliable information was the first step toward making informed choices. This foundational work allowed you to accurately understand the impact of algorithm updates and measure the true performance of your SEO efforts.
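Analytics tools typically exclude internal traffic with an IP-based filter in their admin settings. The sketch below shows the same logic applied to raw hit records using Python’s ipaddress module. The office networks are placeholders taken from the address ranges reserved for documentation.

```python
import ipaddress

# Placeholder office networks (documentation-only ranges); replace with your own.
INTERNAL_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]

def is_internal(ip: str) -> bool:
    """True if the address falls inside any internal network (IPv4 never matches an IPv6 network)."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in INTERNAL_NETWORKS)

def external_hits(hits: list[dict[str, str]]) -> list[dict[str, str]]:
    """Drop hits that came from internal addresses before computing any metrics."""
    return [hit for hit in hits if not is_internal(hit["ip"])]


hits = [
    {"ip": "203.0.113.7", "page": "/"},
    {"ip": "198.51.100.20", "page": "/guide"},
    {"ip": "2001:db8::1", "page": "/blog"},
]
print(external_hits(hits))  # [{'ip': '198.51.100.20', 'page': '/guide'}]
```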

Using Tools to Track Performance

To navigate the post-Panda landscape, marketers relied on a growing set of tools to track performance. Google Webmaster Tools (now Google Search Console) and Google Analytics were the essential free resources, providing direct insight into how Google viewed a site and how users interacted with it. Alongside these, paid platforms like SEOmoz (now Moz) and SEMrush gained popularity by offering more in-depth competitive analysis and rank tracking features. These tools provided the necessary data to diagnose issues, monitor progress, and demonstrate the value of investing in quality content. Today, automated SEO platforms build on these principles, simplifying the complex task of tracking performance across countless metrics.

Lasting Lessons from the 2011 SEO Landscape

The shifts in 2011 weren’t just temporary hurdles; they were foundational changes that set the stage for modern SEO. The core principles that emerged from updates like Panda are more relevant than ever. Understanding these lessons helps clarify why certain strategies work today and how to build a resilient approach to search that can withstand future algorithm updates. The focus moved from technical loopholes to a more holistic, user-centered methodology that rewards quality, authority, and a positive experience. These are the enduring takeaways that every marketer should carry forward.

The Constant Evolution of Algorithms

If 2011 taught us anything, it’s that the only constant in SEO is change. Google’s algorithm updates are not one-off events but a continuous process of refinement. This means an SEO strategy can never be “set and forget.” The Panda update showed that tactics that worked one day could become obsolete the next. This established the need for marketers to remain adaptable and informed. Staying on top of these shifts requires a commitment to continuous learning and a willingness to pivot your strategy. Modern tools can help automate article updates to keep your content aligned with the latest best practices, ensuring your rankings remain stable through algorithmic turbulence.

The Shift Toward User Intent

The Panda update marked a significant pivot from simply matching keywords to understanding user intent. Before 2011, it was common to see low-quality pages ranking well just by stuffing them with relevant keywords. Panda aimed to demote this type of thin, unhelpful content. It forced website owners to ask a more important question: “What is the searcher actually trying to accomplish?” This change rewarded websites with original, valuable information that genuinely addressed a user’s query. This was the beginning of the shift toward creating content for people first and search engines second, a principle that remains at the heart of effective search engine optimization.

The Enduring Need for Quality

Following the Panda update, “quality content” became the mantra for SEO professionals, and for good reason. High-quality content is the foundation of any successful online strategy. In 2011, this meant moving away from duplicate or auto-generated text and toward well-researched, comprehensive, and engaging articles. This principle has only grown stronger over time. Quality content improves search rankings, captivates your audience, builds trust, and encourages social sharing. It’s the most sustainable way to earn high-quality backlinks and establish your site as an authority in its niche. The lesson is simple: invest in creating the best possible resource for your audience, and you give your rankings the strongest foundation they can have.

Preparing for Cross-Platform Search

The changes in 2011 also underscored the need for a flexible strategy that works beyond a single platform. As mobile search began to rise, it became clear that a great user experience mattered on every device. The focus on quality content and clean site architecture made websites more adaptable to different devices and, eventually, to different types of search, like voice and AI. This forward-thinking approach is crucial. By building a strong foundation based on Google’s best practices, you put your site in a better position to adapt to future technologies. An effective content strategy ensures your information is valuable and accessible, no matter how or where your audience is searching.

Frequently Asked Questions

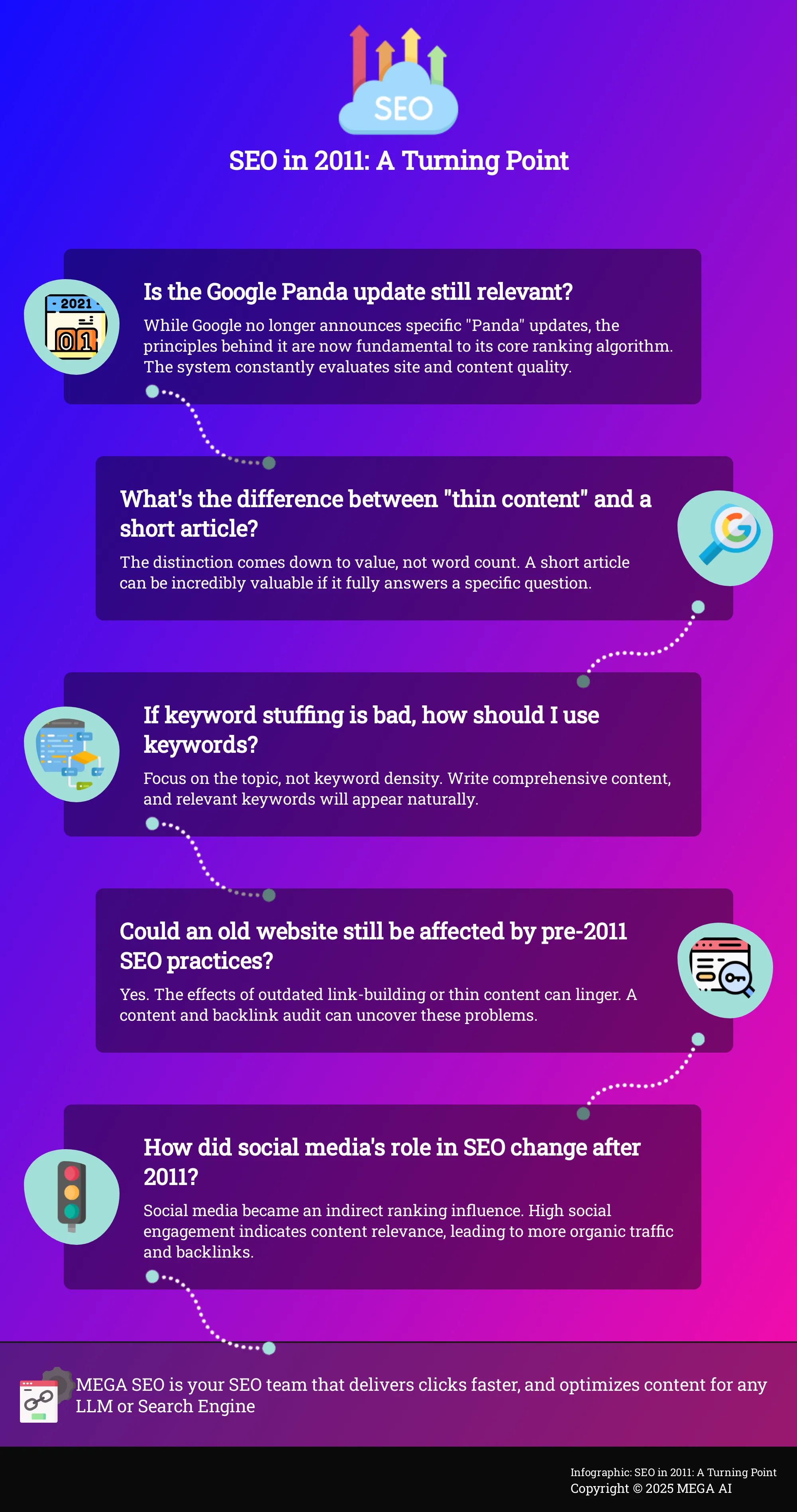

Is the Google Panda update still a factor today?

While Google no longer announces specific “Panda” updates, the principles behind it are now a fundamental part of its core ranking algorithm. The system is constantly evaluating site and content quality. So, while you won’t get hit by a distinct Panda penalty, the core lesson from 2011 remains: websites with thin, low-value content will consistently struggle to rank well against sites that provide genuine value to users.

What’s the difference between “thin content” and just a short article?

The distinction comes down to value, not word count. A short article that directly and completely answers a very specific question can be incredibly valuable to a user. Thin content, on the other hand, offers little to no real substance. It might be a page with a few generic sentences, duplicate text from another source, or content that doesn’t actually answer the question promised in the title. The key is whether the user leaves satisfied.

If keyword stuffing is bad, how should I use keywords in my content?

The best approach is to focus on the topic first and the keywords second. When you write a comprehensive, helpful article that thoroughly covers a subject, you will naturally use the primary keyword and many related terms and phrases. Think about answering the user’s query completely rather than trying to fit a keyword into a sentence a certain number of times. Your priority should always be clear, natural language that serves the reader.

My website is old. Could it still be negatively affected by practices that were common before 2011?

Yes, it’s entirely possible. Search engines have a long memory, and the effects of old, low-quality link-building tactics or pages of thin, keyword-stuffed content can linger for years. If an older site has never been audited or updated, it may be held back by these legacy issues. A thorough content and backlink audit can often uncover these problems and set a path for recovery.

How did social media’s role in SEO change after 2011?

After 2011, social media became a powerful indirect influence on search rankings. While a “like” or a “share” isn’t a direct ranking signal like a backlink, high social engagement became a strong indicator of content relevance and popularity. Content that performed well on social platforms was seen by more people, which in turn led to more organic traffic and a higher likelihood of earning valuable, natural backlinks from other websites.