You’ve published a new blog post, but it’s nowhere to be found on Google. Frustrating, right? The cause usually lies in Google’s indexing process, so knowing how long Google indexing takes is crucial for online success. This post breaks down the process, explores the factors that influence indexing speed, and offers actionable strategies to get your content indexed faster. We’ll cover everything from site structure and content quality to XML sitemaps and Google Search Console, so you understand how long Google takes to index your site and what you can do to speed things up.

Key Takeaways

- Indexing is essential for visibility: If Google can’t find your content, neither can your customers. Prioritize indexing as a cornerstone of your SEO strategy.

- Many factors affect indexing speed: From site structure and content quality to technical SEO, optimizing these elements helps Google find and index your content faster. Regular site audits are key.

- Maintain your site’s indexing health: Fresh content, strong site performance, and adherence to Google’s guidelines ensure long-term visibility. Use tools like Google Search Console and MEGA SEO to stay on top of your site’s health.

Google Indexing: What It Is & Why It Matters

Imagine Google as a massive library filled with billions of books (web pages). For someone to find your book, the library needs to know it exists, categorize it, and place it on a shelf. That’s essentially what Google indexing is. It’s the process where Google discovers, analyzes, and stores information about your web pages so they can appear in search results.

This process involves a few key steps. First, Google’s automated bots, known as Googlebot, discover your web page. Think of this as the library acquiring your book. Next, Googlebot crawls your page, analyzing the content, images, and code to understand what it’s about—like a librarian carefully categorizing the book. Finally, Google renders the page, figuring out how it looks and functions for users, ensuring the experience matches what searchers expect.

Why does this matter? Simply put, if your site isn’t indexed, it’s invisible to searchers: Google can’t show what it doesn’t know exists. Indexing determines whether and when your pages appear in search results, and therefore how much organic traffic they can attract. If Google can’t find and understand your content, potential customers won’t either. That’s why ensuring your website is properly indexed is the first step to a successful SEO strategy. Consider exploring MEGA SEO’s free tools to help improve your site’s visibility and searchability.

How Long Does Google Indexing Take?

Getting your website noticed by Google is a crucial first step for any online business. But how long does it actually take for Google to index your site and its pages so they can show up in search results? There’s no single magic number, but understanding the process and the factors involved can help you manage expectations and optimize your strategy.

The Three Stages of Indexing (Discovery, Crawling, Rendering)

Google’s indexing process, the key to your website’s visibility, happens in three main stages: discovery, crawling, and rendering. Think of it as Google getting to know your website. During discovery, Googlebot finds your page, perhaps through a link from another site or your submitted sitemap. This is like Googlebot stumbling upon your book in a vast library. The next step, crawling, involves Googlebot examining your page’s content, images, and code, much like a librarian categorizing a new book. Finally, Googlebot renders the page, processing the JavaScript and CSS to understand how it looks and functions for users. This ensures the user experience aligns with what searchers expect when they click on your link in the search results.

Is Indexing Guaranteed?

You’ve poured your heart into creating stellar content, but sadly, even high-quality content isn’t guaranteed to be indexed. Google’s algorithms are complex and consider numerous factors when deciding which pages get indexed and how quickly. Think of it like this: just because a library acquires a book doesn’t mean it’ll immediately be available on the shelves. Factors like site structure, technical SEO issues, and the overall authority of your website all play a role. While quality content is essential, it’s not the only factor influencing Google’s decision.

Anecdotal Evidence of Increased Indexing Times

While Google strives for efficiency, the reality is that indexing times can fluctuate. Recent discussions among website owners suggest that indexing times may have increased for some. Several users have reported longer wait times than in the past, leading to some frustration as they wait for their new content to appear in search results. This highlights the importance of patience and proactive SEO practices. While you can’t control Google’s timeline, you can optimize your site to make it as easy as possible for Googlebot to discover, crawl, and index your content efficiently. Using tools like MEGA SEO can help automate and streamline these processes, giving you a competitive edge in the search landscape.

What Factors Affect Indexing Time?

Several factors influence how quickly Google indexes your website. Think of it like a librarian cataloging a massive library – the size and organization of the library, the uniqueness of the books, and how easily the librarian can access the shelves all play a role. Similarly, factors like these affect Google’s indexing speed:

- Site size and complexity: A small website with a few pages will naturally get indexed faster than a large, complex site with thousands of pages. Conductor notes that smaller sites can be indexed in 4–6 weeks, while larger sites can take more than 6 months.

- Content similarity: If your content is very similar to other content already on the web, Google might take longer to index it or might not index it at all, especially if your site is new or has low authority. Duplicate content can also be a factor. Google prioritizes original content, so make sure yours stands out.

- Crawl process: Google’s automated bots, called “crawlers,” discover and index your web pages. How frequently Google crawls your site depends on factors like your site’s update frequency and authority. A well-structured site makes it easier for crawlers to do their job.

- Content quality: High-quality, original content gets indexed more quickly. Google wants to provide its users with the best possible search results, so it prioritizes valuable content. Check out MEGA SEO’s content generation tools to help create compelling, original content.

Crawl Budget: How Much Time Does Google Have for Your Site?

Googlebot has a limited amount of time to spend crawling each website. This is known as the crawl budget. Think of it as a librarian having a set number of hours each day to catalog new books. A larger website with more pages and a complex structure will require a larger crawl budget than a smaller, simpler site. Optimizing your site’s structure and internal linking can help Googlebot use its crawl budget efficiently, ensuring it focuses on the most important pages. A site with frequent server errors or slow loading times will waste Googlebot’s time, impacting the number of pages indexed.

Of the three indexing stages (discovery, crawling, and rendering), crawling and rendering are where crawl budget is actually spent: every URL Googlebot fetches counts against it, including the CSS and JavaScript files it needs to render a page.

Crawl Demand: Which Pages are Most Important?

Not all pages are created equal in the eyes of Google. Crawl demand, a key factor influencing indexing speed, signifies how much Google *wants* to crawl your site. Pages that are frequently updated, receive a lot of traffic, or have many high-quality backlinks signal to Google that they are important. Prioritizing these high-demand pages through internal linking and sitemaps can speed up the indexing of your most valuable content. Frequently updating your content and building high-quality backlinks can increase crawl demand.

Website Quality: Beyond Just Content

While fresh, high-quality content is essential for attracting visitors and ranking well, it also plays a role in indexing speed. A website with well-structured, original content is more likely to be indexed quickly. Conversely, sites with thin content, duplicate content, or a confusing navigation structure can deter Googlebot. This can slow down the indexing process. MEGA SEO’s free SEO tools can help you analyze your content and identify areas for improvement. A well-structured site with a clear navigation hierarchy makes it easier for Googlebot to discover and index all your pages.

Server Issues: Downtime and Errors

A slow or overloaded server can significantly impact Googlebot’s ability to crawl your site. Downtime and server errors frustrate users and signal to Google that your site is unreliable. This can lead to a lower crawl frequency and slower indexing. Regularly monitoring your server performance and addressing any technical issues is crucial for maintaining a healthy crawl budget. A reliable hosting provider and a well-optimized website can minimize downtime and errors.
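If you have access to your server’s raw access logs, a quick tally of how Googlebot’s requests are answered shows whether server errors are eating into your crawl budget. The Python sketch below assumes logs in the common Apache/Nginx “combined” format, and the function names are hypothetical. It identifies Googlebot by user-agent string only, which can be spoofed, so treat the result as a rough first check rather than verified Googlebot traffic.

```python
import re
from collections import Counter

# Assumes the Apache/Nginx "combined" log format; adjust the pattern for other formats.
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_status_summary(lines):
    """Count requests whose user agent mentions Googlebot, grouped by status class."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip unparseable lines and non-Googlebot traffic
        counts[match.group("status")[0] + "xx"] += 1  # e.g. "503" -> "5xx"
    return counts

def server_error_rate(counts):
    """Share of Googlebot requests answered with a 5xx server error."""
    total = sum(counts.values())
    return counts["5xx"] / total if total else 0.0
```

A rising 5xx share for Googlebot is a signal to look at hosting capacity or application errors before worrying about content.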

Content Similarity: Challenges for Large Sites

For large websites with thousands of pages, content similarity can pose a significant challenge for indexing. If a large portion of your content is very similar, Google may see it as less valuable. Google may then prioritize crawling and indexing other, more unique content. This is especially true for new sites or those with low authority. Focusing on creating diverse, original content for each page is essential for large sites to maximize their indexing coverage. Tools like Copyscape can help you identify and address duplicate content issues.

Website Authority: A Key Factor

Website authority is a measure of your site’s credibility and trustworthiness in Google’s eyes. It plays a significant role in indexing speed. High-authority websites, typically those with a strong backlink profile and a history of publishing quality content, are crawled and indexed more frequently. Building authority takes time and effort, but it’s a worthwhile investment for long-term SEO success. Consider using MEGA SEO’s automated link-building tools to help improve your site’s authority.

Topic Competitiveness: Indexing in Crowded Niches

The competitiveness of your niche can also influence indexing time. In highly competitive niches, where many websites are vying for the same keywords, Google may take longer to index new content. Because Google wants to provide the most up-to-date and relevant information to its users, regularly publishing high-quality content with relevant keywords helps. Focusing on unique, valuable content that caters to the specific needs of your target audience can help you stand out and improve your chances of getting indexed quickly. Conduct thorough keyword research to identify relevant terms with lower competition.

Average Indexing Time for New Sites & Pages

While indexing times vary, some general timeframes can give you a benchmark. Keep in mind these are averages, and your experience might differ:

- New websites: Local Digital suggests that getting a new website indexed can take anywhere from a few days to a few months, with most sites indexed within four days. However, complete indexing and appearance in search results usually takes 2-3 weeks.

- New pages: StoryChief reports that 83% of pages are indexed within the first week of publication. This suggests that most new pages get indexed relatively quickly.

The size of your website also plays a significant role. Conductor provides these general estimates:

- Small websites (under 500 pages): Typically 3-4 weeks.

- Medium websites (500-25,000 pages): 2-3 months.

- Large websites (over 25,000 pages): 4-12 months.

Remember, these are just estimates. Using tools like MEGA SEO can help streamline the process and potentially speed up indexing by automating key SEO tasks and ensuring your site follows best practices. You can learn more about how MEGA SEO can help by booking a demo or exploring our free tools.

How Google Crawls & Indexes Your Website

Getting your website noticed by Google involves a fascinating process. It’s not magic, but a systematic approach Google uses to discover, understand, and organize web pages. This process is what we call crawling and indexing. Think of it as Google creating a massive library of web pages—indexing is like adding your website to its catalog. Once indexed, your site can appear in search results, making it visible to potential customers.

How Does Google Discover New Content?

Google discovers new content through a multi-step process. First, Googlebot, Google’s automated web crawler, finds your web page. This discovery phase often happens through links from other websites. Think of it like following a trail of breadcrumbs across the web. Once Googlebot locates your page, the crawling process begins. During crawling, Googlebot analyzes the content, looking at the text, images, and other elements to understand what your site is about. Finally, Googlebot renders the page. This allows Googlebot to see how the page appears to users, including how it looks and functions. This complete picture helps Google understand the user experience your site offers.

Googlebot’s Role in Indexing

Googlebot is essential to the indexing process. After discovering your site, Googlebot crawls it to analyze the content and structure. This information is then used to build an index, which is essentially a giant database of web pages. This index is what Google uses to serve up relevant results when someone performs a search. The time it takes for a website to be indexed can vary, depending on factors like website size and content quality. A well-structured website with high-quality, unique content is more likely to be indexed quickly.

What Affects Google Indexing Speed?

Several factors influence how quickly Google indexes your website. Understanding these elements can help you optimize your site for faster indexing and improved visibility in search results. Let’s explore some key factors:

Website Age & Authority

Newer websites generally take longer to get indexed than established sites with a proven track record. Think of it like building trust—Google needs time to assess the quality and relevance of a new site. A site’s authority, built over time through high-quality content and strong backlinks, can significantly expedite the indexing process. Essentially, Google prioritizes websites it deems trustworthy and authoritative. You can learn more about how long it takes Google to index your site from resources like Local Digital.

Site Structure & Internal Linking

A well-organized website with clear, logical internal linking makes it easier for Googlebot, Google’s web crawler, to discover and index your pages. A good site structure is like a well-designed roadmap, guiding Googlebot through your content efficiently. Conversely, a poorly structured site with broken links or confusing navigation can hinder the crawling process, slowing down indexing. Technical issues like incorrect use of noindex tags or errors in your robots.txt file can also block pages from being indexed.

Content Quality and Uniqueness

Google prioritizes fresh, original content. High-quality, unique content signals to Google that your website offers value to users, increasing the likelihood of faster indexing. Thin content, duplicate content, or content scraped from other sites is less likely to be indexed quickly, or at all. StoryChief offers helpful advice on creating engaging, indexable content. Consider using MEGA SEO’s content generation tools to ensure your content is original and optimized for search engines.

XML Sitemaps & Their Impact

An XML sitemap acts as a guide for search engines, providing a comprehensive list of all the pages on your website. Submitting an XML sitemap through Google Search Console helps Google discover and index your content more efficiently, especially for larger websites with complex structures. Think of it as handing Google a detailed map of your website, making it easier for them to find all your important pages. Conductor provides further information on the importance of XML sitemaps for Google indexing. For automated sitemap generation and management, explore MEGA SEO’s free tools.

Server Response Times & Site Speed

A slow-loading website can negatively impact your Google indexing speed. Googlebot has a limited crawl budget, meaning it allocates a specific amount of time to crawl each website. If your server is slow or your pages take too long to load, Googlebot may not be able to crawl and index all your content effectively. Optimizing your website’s performance and ensuring fast server response times are crucial for efficient indexing. StoryChief also discusses the impact of site speed on indexing. You can leverage MEGA SEO’s technical SEO features to improve your website’s performance and speed.

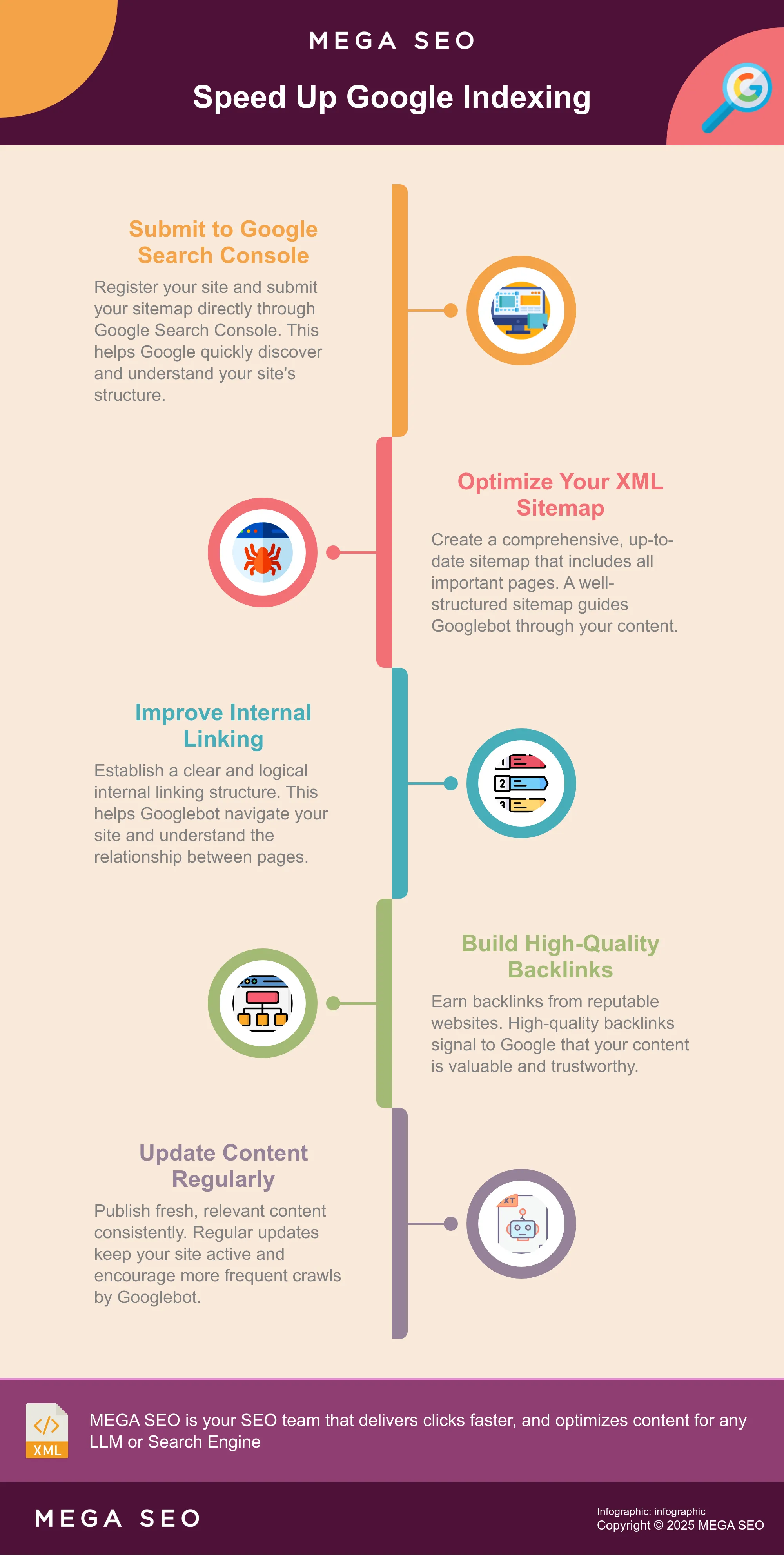

How to Speed Up Google Indexing

Getting your website indexed by Google quickly is crucial for online visibility. While there’s no magic bullet, several strategies can help expedite the process. Here’s how to make it easier for Google to find and index your content:

Submit Your Site to Google Search Console

Google Search Console is your central hub for understanding how Google sees your website. Submitting your sitemap directly through Google Search Console can significantly reduce the time it takes for your pages to get indexed. It also offers valuable data on crawl errors and indexing issues, allowing you to address any roadblocks and improve your site’s overall SEO health. Use the platform to monitor your site’s indexing status and identify areas for improvement.

Create & Optimize XML Sitemaps

An XML sitemap acts as a roadmap for search engines, guiding them through all the important pages on your website. A well-structured sitemap ensures Googlebot can discover and crawl your content efficiently. Make sure your sitemap is up-to-date and accurately reflects your site’s structure, including new pages as you add them. Submitting your sitemap through Google Search Console streamlines the indexing process.

Ensure Your Sitemap is Accurate and Up-to-Date

Think of your sitemap as a dynamic document, not a static one. It needs to accurately reflect the current state of your website. An outdated sitemap can mislead search engines and prevent them from indexing your latest content. Regularly update your sitemap, especially after adding new pages or making significant changes to your site structure. This ensures that Google has the most current information about your website, facilitating faster and more accurate indexing. Conductor’s research highlighted how an up-to-date XML sitemap is crucial for efficient indexing, particularly for larger websites.
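Keeping a sitemap current is easiest when it is generated from your own list of pages rather than edited by hand. Here is a minimal Python sketch using only the standard library; it assumes you can supply each page’s URL and last-modified date (the `build_sitemap` name and the example URLs are hypothetical). The `<lastmod>` element follows the sitemaps.org protocol and tells crawlers when a page last changed.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes characters like "&"
        # W3C date format (YYYY-MM-DD), as the sitemap protocol expects
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")

sitemap_xml = build_sitemap([
    ("https://example.com/", date(2024, 1, 15)),
    ("https://example.com/blog/new-post", date(2024, 3, 2)),
])
print(sitemap_xml)
```

Regenerate the file whenever pages are added or changed. A single sitemap file is capped at 50,000 URLs or 50 MB uncompressed, so very large sites split theirs across several files listed in a sitemap index.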

Include All Important Pages in Your Sitemap

Your XML sitemap acts as a guide for search engines, providing a comprehensive list of all the important pages on your website. It’s essential to ensure your sitemap includes *all* pages you want Google to index. This is especially important for eCommerce sites or sites with large archives. Omitting key pages can lead to those pages being overlooked by search engines, impacting their visibility and findability. A comprehensive sitemap helps Google understand the overall structure and content of your site, leading to more effective crawling and indexing. MEGA SEO’s guide to Google Search Console emphasizes that a well-maintained sitemap is a cornerstone of a successful SEO strategy. Make sure your sitemap isn’t just accurate and up-to-date, but also thorough in its inclusion of all relevant pages. Consider using MEGA SEO’s free tools to help manage and optimize your sitemap.
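A simple way to catch omissions is to compare the pages you consider important against the URLs actually listed in your sitemap. This sketch assumes you maintain that list of important URLs yourself (for example, exported from your CMS); the function names and URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(sitemap_xml):
    """Return the set of <loc> URLs listed in a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)}

def missing_from_sitemap(important_urls, sitemap_xml):
    """Important URLs that the sitemap does not list, sorted for readability."""
    return sorted(set(important_urls) - sitemap_urls(sitemap_xml))
```

Anything this returns is a page Google has to find some other way, such as through internal or external links.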

Improve Your Internal Linking Structure

A clear and logical internal linking structure is essential for both user experience and SEO. Internal links connect your pages, making it easier for Googlebot to crawl your site and understand the relationship between different pieces of content. Think of it as creating a well-organized library where Google can easily find and categorize all your books (web pages). A well-planned site architecture, supported by a robust internal linking strategy, leads to faster indexing and improved discoverability.

How Internal Linking Improves Site Structure

Internal links also improve crawling efficiency: Googlebot can move easily from one page to another, discovering and indexing your content more effectively.

When your site is easy for Googlebot to access, your pages get indexed more quickly. Consider using a tool like MEGA SEO to automate the process of building a strong internal linking structure, ensuring your site is easy for both users and search engines to crawl. You can schedule a demo to learn more.
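To see how internal links shape crawlability, you can model your site as a graph and measure how many clicks each page sits from the homepage. Pages that no internal link reaches are “orphans” that Googlebot can only find through a sitemap or external links. The sketch below is a plain breadth-first search over a hypothetical link map you would build from your own crawl or CMS data.

```python
from collections import deque

def click_depths(links, home):
    """links: dict mapping each page to the internal pages it links to.
    Returns {page: minimum number of clicks from home} for every reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def orphan_pages(all_pages, links, home):
    """Pages that cannot be reached from the homepage by following internal links."""
    return sorted(set(all_pages) - set(click_depths(links, home)))
```

Pages with a large click depth, or any orphans, are good candidates for new internal links from related, well-linked pages.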

Generate High-Quality Backlinks

Earning backlinks from reputable websites is a strong signal to Google that your content is valuable and trustworthy. High-quality backlinks not only improve your search rankings but also increase the likelihood of faster indexing. Focus on building relationships with other websites in your niche and creating high-quality content that naturally attracts links. Resources like Ahrefs can help you analyze and improve your backlink profile.

Update Your Content Regularly

Fresh, relevant content keeps Google coming back for more. Regularly updating your existing content and adding new pages shows Google that your site is active and provides valuable information to users. This can lead to more frequent crawls and faster indexing of new and updated pages. A consistent publishing schedule is key for maintaining a healthy and dynamic website that ranks well in search results.

Eliminate Infinite Crawl Spaces

Picture Googlebot as a diligent librarian trying to organize a vast library. A well-structured website acts as a clear floor plan, guiding the librarian (Googlebot) smoothly through each section (page). An infinite crawl space is the opposite: a part of your site that generates a practically unlimited number of URLs, such as calendar pages with endless “next month” links, faceted filters that combine into countless parameter variations, or session IDs appended to every link. Googlebot can end up spending its time on these near-identical URLs instead of your real content. By removing or blocking these URL patterns and keeping navigation clear, you create a more efficient path for Googlebot, leading to faster indexing and improved visibility.
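One way to spot an infinite crawl space is to normalize the URLs Googlebot requests (taken from your logs or a site crawl) and see how many raw URLs collapse into each real page. The sketch below assumes a hypothetical set of query parameters that don’t change page content; which parameters qualify is specific to each site, so the list is illustrative only.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical: parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign", "sort"}

def normalize_url(url):
    """Lowercase the host, drop ignored parameters, sort the rest, and drop the fragment."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))

def distinct_pages(urls):
    """The set of distinct pages left after normalization."""
    return {normalize_url(url) for url in urls}
```

Sorting the remaining parameters means `?a=1&b=2` and `?b=2&a=1` count as one page. If thousands of raw URLs collapse into a handful of pages, that URL pattern is a candidate for a robots.txt rule, a canonical tag, or simply not generating those links.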

Disallow Irrelevant Pages in robots.txt

Your robots.txt file acts as a gatekeeper for search engine crawlers. It tells Googlebot which parts of your site to crawl and which to skip. By strategically using the disallow directive in your robots.txt file, you can prevent Googlebot from wasting time crawling irrelevant pages, such as administrative areas or thank-you pages. This focuses Googlebot’s attention on your important content, optimizing crawl efficiency and potentially speeding up indexing for the pages that matter most. Keep in mind that robots.txt controls crawling, not indexing: a disallowed URL can still appear in search results if other pages link to it, and Googlebot can’t see a noindex tag on a page it isn’t allowed to crawl. For automated assistance with technical SEO, including managing your robots.txt, explore MEGA SEO.
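Before deploying robots.txt changes, it helps to test which URLs a rule set actually blocks. Python’s standard-library `urllib.robotparser` can do a rough check. It does not replicate Google’s own matching exactly (Google supports `*` and `$` wildcards and resolves conflicts in favor of the most specific rule, while this parser uses simple prefix matching), so treat the result as a sanity check, not a guarantee. The rules and URLs below are hypothetical examples.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of admin and thank-you pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /thank-you
"""

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

print(blocked_urls(ROBOTS_TXT, [
    "https://example.com/admin/settings",
    "https://example.com/thank-you",
    "https://example.com/blog/new-post",
]))
```

If a page you want indexed shows up in the blocked list, fix the rule before the file goes live.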

Merge Duplicate Content

Duplicate content can confuse search engines. If you have multiple pages with very similar content, Google may struggle to determine which version is the most relevant. This can lead to slower indexing or even complete omission from search results. Consolidating duplicate content into a single, authoritative page strengthens your site’s overall SEO and improves the user experience. As Conductor explains, Google prioritizes original content. MEGA SEO’s content audit tools can help you identify and merge duplicate content, ensuring your site presents a clear and consistent message.
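To find candidates for merging, you can compare pages by how many word sequences they share. The sketch below computes Jaccard similarity over three-word “shingles”. It is one common near-duplicate heuristic, not the method Google uses, and the 0.8 threshold and sample page texts are assumptions to tune for your own site. It compares every pair of pages, which is fine for a few thousand pages but slows down quadratically beyond that.

```python
import re
from itertools import combinations

def shingles(text, size=3):
    """Return the set of overlapping `size`-word sequences in the text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(max(len(words) - size + 1, 1))}

def jaccard(a, b):
    """Share of shingles two pages have in common (1.0 means identical sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def near_duplicates(pages, threshold=0.8):
    """pages: dict of URL -> page text. Returns (url_a, url_b, score) pairs at or above threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for url_a, url_b in combinations(sorted(sets), 2):
        score = jaccard(sets[url_a], sets[url_b])
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 2)))
    return pairs
```

Pairs above the threshold are worth reviewing by hand. Once you choose the authoritative version, a 301 redirect or a canonical tag points search engines to it.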

Prerender JavaScript Pages and Dynamic Content

Modern websites often rely on JavaScript to create dynamic and interactive experiences. However, Googlebot can sometimes struggle to process JavaScript-heavy content, which can delay indexing. Prerendering your JavaScript pages ensures that Googlebot receives a fully rendered HTML version of your content, making it easier to understand and index. This is particularly important for websites with complex user interfaces or dynamic content that relies heavily on JavaScript. Prerendering can improve your site’s visibility and ensure that all your content is accessible to search engines.
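A quick way to tell whether a page depends on JavaScript for its main content is to look at the raw HTML your server returns, before any scripts run, and check whether key phrases are there. The sketch below uses Python’s built-in `html.parser`. It is a rough heuristic that does not execute JavaScript or emulate Google’s renderer, and the function names and sample markup are hypothetical.

```python
from html.parser import HTMLParser

class VisibleTextExtractor(HTMLParser):
    """Collects text from raw HTML, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)

def missing_in_raw_html(raw_html, key_phrases):
    """Key phrases that do not appear in the HTML text before any JavaScript runs."""
    extractor = VisibleTextExtractor()
    extractor.feed(raw_html)
    text = " ".join(extractor.parts).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in text]
```

If important text only shows up after rendering, prerendering or server-side rendering puts it into the initial HTML response.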

Leverage the Google Indexing API (For Applicable Content)

Google’s Indexing API lets you notify Google directly when a page is added, updated, or removed, rather than waiting for Googlebot to discover the change on its own. There is an important limitation, though: Google officially supports the API only for pages with job posting (JobPosting) or livestream (BroadcastEvent embedded in a VideoObject) structured data, so it is not a general shortcut for news articles or product pages. For eligible, time-sensitive pages where immediate indexing is crucial, it can be a powerful tool. To use it, you set up a service account and add it as an owner of your property in Google Search Console.
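For eligible pages, a notification is a single authenticated POST to the Indexing API’s `urlNotifications:publish` endpoint, with a JSON body containing the page URL and a `URL_UPDATED` or `URL_DELETED` type. The sketch below only builds the request with the standard library. It assumes you already have an OAuth 2.0 access token for a service account with the `https://www.googleapis.com/auth/indexing` scope (obtaining one is not shown), and the job URL and token are placeholders.

```python
import json
import urllib.request

INDEXING_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"

def build_publish_request(page_url, access_token, deleted=False):
    """Build (but do not send) an Indexing API notification for one page."""
    body = {"url": page_url, "type": "URL_DELETED" if deleted else "URL_UPDATED"}
    return urllib.request.Request(
        INDEXING_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",  # placeholder token in examples
        },
        method="POST",
    )

request = build_publish_request("https://example.com/jobs/123", "ACCESS_TOKEN")
```

Sending it is then a matter of `urllib.request.urlopen(request)`. Google applies a daily quota to publish requests, so check your project’s limits before automating this.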

Using MEGA SEO for Automated Indexing and Content Optimization

MEGA SEO offers tools to streamline and automate website optimization for Google indexing. From automated sitemap generation to content optimization and link building, MEGA SEO simplifies complex SEO tasks, making it easier to improve your site’s visibility and search rankings.

Automated Sitemap Generation and Submission

Submitting your sitemap through Google Search Console can significantly reduce indexing time. MEGA SEO automates sitemap generation and submission, ensuring your content is easily discoverable. This saves you time and effort.

Content Optimization for Improved Crawlability

High-quality, original content is more likely to be indexed quickly and rank well. MEGA SEO’s content optimization tools help you create compelling, SEO-friendly content. From keyword research to content structuring and readability analysis, MEGA SEO ensures your content is optimized for both crawlability and user engagement.

Internal and External Link Building

A strong link profile is essential for website authority and visibility. MEGA SEO’s link-building tools help you identify opportunities for building high-quality internal and external links. Earning backlinks from reputable websites signals to Google that your content is valuable. By automating link building, MEGA SEO strengthens your site’s authority and improves its indexing chances.

Why Is My Site Indexing Slowly?

If your site isn’t indexing as quickly as you’d like, several factors could be at play. Let’s explore some common culprits and how to address them.

Technical Issues Affecting Crawlability

Technical glitches can significantly hinder Googlebot’s ability to crawl your website. Server errors, for example, can make your pages inaccessible, preventing Google from indexing them. Similarly, incorrect directives in your robots.txt file can unintentionally block Googlebot from accessing important sections. Regularly check your server logs for errors and review your robots.txt file to ensure it’s configured correctly. MEGA SEO’s automated site audit can help streamline this process, identifying and flagging potential crawl issues.

Low-Quality or Duplicate Content

Google prioritizes high-quality, original content. If your site contains thin, duplicate, or low-value content, Google may deem it less important and delay indexing. Focus on creating informative, engaging, and unique content that caters to your target audience. Conduct regular content audits to identify and address any duplicate content issues. MEGA SEO’s Content Generation features can assist in creating fresh, original content, while the Maintenance Agent can help optimize existing content for quality and relevance.

Insufficient Site Authority

Newer websites often take longer to get indexed compared to established, authoritative sites. Building site authority takes time and involves creating high-quality content and earning backlinks from reputable sources. Focus on developing a strong internal linking structure and actively pursue backlink opportunities to improve your site’s authority. MEGA SEO can automate both internal and external linking strategies, helping you build authority over time.

Noindex Tags & Robots.txt: Common Mistakes

While noindex tags and robots.txt directives are valuable tools for controlling which pages get indexed, incorrect implementation can inadvertently slow down your site’s indexing. Double-check that you haven’t accidentally applied noindex tags to pages you want indexed. Also, review your robots.txt file to ensure it’s not blocking Googlebot from accessing critical content. MEGA SEO can help manage these technical aspects, ensuring your directives are correctly implemented and aligned with your indexing goals.
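A quick audit can catch accidental noindex directives before they cost you traffic. Google reads them from two places: a robots `<meta>` tag in the page’s HTML and the `X-Robots-Tag` HTTP response header. The sketch below checks both using Python’s standard library; the function names and sample markup are hypothetical, and it only looks at the generic `robots` and `googlebot` meta names.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attributes = {name.lower(): (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attributes.get("content", "").lower())

def has_noindex(html, headers=None):
    """True if a robots meta tag or an X-Robots-Tag header contains 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_values = [
        value.lower() for name, value in (headers or {}).items() if name.lower() == "x-robots-tag"
    ]
    return any("noindex" in directive for directive in parser.directives + header_values)
```

Run it against the pages you expect to rank; any `True` result on one of them deserves a closer look.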

Tools to Monitor & Track Google Indexing

Knowing how long Google indexing takes is only half the battle. You also need tools to monitor the process and troubleshoot any issues. Thankfully, several resources can help you keep tabs on your site’s indexing status.

Google Search Console Features

Google Search Console is your primary resource for understanding how Google views your website. It offers a suite of tools to monitor and track your indexing status. You can submit sitemaps directly through Search Console, informing Google about all the pages on your site you want indexed. Search Console also provides invaluable insights into why specific pages might not be indexed, helping you address any underlying problems. If you’ve updated your website, you can also request a recrawl through Search Console. Google recrawls pages on its own schedule, so a manual request is most useful for important changes you want reflected in search results sooner. The URL Inspection Tool lets you check when Google last crawled a specific URL and submit it to Google’s crawl queue, which can help speed up indexing.
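If you want to check index status for many URLs, Search Console also exposes URL inspection data through its API. The sketch below builds a request to the `urlInspection/index:inspect` endpoint and pulls two fields from the parsed JSON response. It assumes you already have an OAuth access token with Search Console access and that `siteUrl` matches a property you’ve verified. The response field names (`coverageState`, `lastCrawlTime`) come from Google’s API reference and are worth confirming there, and the sample response in the usage line is made up for illustration. Note that this API reports status only; requesting indexing still happens in the Search Console interface.

```python
import json
import urllib.request

INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"

def build_inspection_request(page_url, property_url, access_token):
    """Build (but do not send) a URL Inspection API request for one page."""
    body = {"inspectionUrl": page_url, "siteUrl": property_url}
    return urllib.request.Request(
        INSPECT_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {access_token}"},
        method="POST",
    )

def summarize_inspection(response):
    """Return (coverage state, last crawl time) from a parsed JSON response dict."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return status.get("coverageState", "unknown"), status.get("lastCrawlTime", "never")

# Illustrative response shape only, not real data:
sample = {"inspectionResult": {"indexStatusResult": {"coverageState": "Submitted and indexed",
                                                     "lastCrawlTime": "2024-05-01T08:30:00Z"}}}
print(summarize_inspection(sample))
```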

Third-Party Indexing Tools

While Google Search Console is the gold standard, some third-party SEO tools offer additional features for monitoring indexing. Some users recommend tools like Rank Math for instant indexing, but remember that no tool can guarantee immediate results. Google controls the indexing process. Focus on creating high-quality, easily accessible content, and use these tools to track progress and identify potential roadblocks. Managing expectations and prioritizing a solid content strategy is key to long-term SEO success.

Troubleshooting Indexing Issues

Running into indexing problems can be frustrating. Here’s a breakdown of common issues and how to address them:

Identify & Fix Crawl Errors

Crawl errors happen when Googlebot can’t access your web pages, and fixing them is crucial for getting your content indexed. Common causes include server errors (5xx responses), pages that return 404s, redirect loops, and robots.txt rules that block Googlebot. Also make sure important pages don’t carry a noindex tag; strictly speaking, that isn’t a crawl error, since Googlebot can still fetch the page, but it keeps the page out of the index just as effectively. A well-structured website that’s easy for Googlebot to crawl will generally index faster. Use MEGA SEO’s site audit tools to identify and fix these issues quickly. Our platform can automatically update your robots.txt file and remove any accidental noindex tags, ensuring Googlebot can access and index your content. You can learn more about crawl errors and how to fix them in our SEO resources.
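Noindex directives can hide in two places: a robots meta tag in the page’s HTML or an X-Robots-Tag HTTP response header. The sketch below checks both for a page you’ve already fetched, using Python’s standard-library `html.parser`. It treats `none` as equivalent to `noindex, nofollow`. As a simplifying assumption, it ignores user-agent-scoped header values such as `X-Robots-Tag: googlebot: noindex`. The sample HTML is hypothetical.

```python
from html.parser import HTMLParser


def split_directives(value: str) -> list[str]:
    """Split a comma-separated directive list such as 'noindex, follow'."""
    return [part.strip().lower() for part in value.split(",") if part.strip()]


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names; valueless attributes arrive as None.
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            self.directives += split_directives(values.get("content", ""))


def has_noindex(html: str, headers: dict[str, str]) -> bool:
    """True if the page's meta tags or X-Robots-Tag header say noindex (or none)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # HTTP header names are case-insensitive.
    header_value = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    directives = parser.directives + split_directives(header_value)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives


page = '<head><meta name="robots" content="noindex, follow"></head>'
print(has_noindex(page, {"Content-Type": "text/html"}))  # → True
```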

Address Manual Actions & Penalties

Sometimes, Google issues manual actions or penalties against websites that violate its guidelines. These penalties can significantly impact indexing. If you suspect a penalty, check the Manual actions report in Google Search Console. Addressing these issues promptly is key to getting your site back on track. MEGA SEO can help you identify potential problems and guide you through the process of fixing them and submitting a reconsideration request to Google. Book a demo to see how MEGA SEO can simplify this process.

Request Recrawls for Updated Content

If you’ve made significant updates to existing content or added new pages, you can request a recrawl through Google Search Console. This signals to Google that your site has fresh content to index. The URL Inspection tool in Search Console is your go-to resource for this. Keep in mind that there’s a daily limit on how many URLs you can submit this way, and repeatedly requesting a recrawl for the same page won’t make things faster. MEGA SEO’s Maintenance Agent can automate content updates and automatically request recrawls, streamlining this process and saving you time. Try our free tools to get started.
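Because manual requests are capped, it helps to spend them on pages that actually changed. The following is a hypothetical helper, not part of any Google tool: it picks pages updated since their last recrawl request, newest change first. `daily_limit` is a placeholder you set yourself rather than Google’s actual quota, and the update and request timestamps are assumed to come from your own CMS or logs.

```python
from datetime import datetime


def pick_recrawl_batch(
    last_updated: dict[str, datetime],
    last_requested: dict[str, datetime],
    daily_limit: int,
) -> list[str]:
    """Return up to daily_limit URLs changed since their last recrawl request, newest change first."""
    pending = [
        url
        for url, updated in last_updated.items()
        # Skip pages already requested after their latest update; asking again won't help.
        if url not in last_requested or last_requested[url] < updated
    ]
    pending.sort(key=lambda url: last_updated[url], reverse=True)
    return pending[:daily_limit]


# Hypothetical timestamps.
updated = {
    "/blog/new-post": datetime(2024, 5, 20),
    "/blog/refreshed-guide": datetime(2024, 5, 18),
    "/about": datetime(2024, 1, 5),
}
requested = {"/about": datetime(2024, 1, 6)}
print(pick_recrawl_batch(updated, requested, daily_limit=1))  # → ['/blog/new-post']
```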

Specific Steps to Request Indexing in Google Search Console

Google Search Console (GSC) is your primary tool for communicating with Google about your website’s indexing. Here’s how to request indexing:

- Use the URL Inspection Tool: Log in to your GSC account and paste the URL you want indexed into the inspection search bar at the top of the page.

- Check Indexing Status: The tool will show the page’s current indexing status, such as “URL is on Google” or “URL is not on Google.”

- Test the Live URL: Before requesting indexing, select “Test Live URL.” This checks whether Google can access and render the current version of the page. Fix any errors it reports first; a page Google can’t fetch won’t be indexed no matter how often you request it.

- Request Indexing: Click “Request Indexing.” Google will add your URL to its crawl queue. This doesn’t guarantee instant indexing, but it signals Google to prioritize your page. The option is available for already-indexed pages too, which is useful after a significant update.

- Monitor Your Request: Re-inspect the URL periodically to see whether Google has indexed the page. Indexing takes time, so be patient.
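If you need to check many URLs, the Search Console API’s URL Inspection endpoint returns the same index status the tool shows. As far as we know, it only reports status; the “Request Indexing” action itself still happens in the Search Console interface. The sketch below builds the request with Python’s standard library and summarizes the response. It assumes you already have an OAuth 2.0 access token with Search Console access (obtaining one isn’t shown) and that `siteUrl` matches a property you’ve verified, such as `https://example.com/` or `sc-domain:example.com`. Treat it as a starting point rather than a production client.

```python
import json
import urllib.request

# Search Console API v1 URL Inspection endpoint.
INSPECT_URL = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspect_request(page_url: str, property_url: str, access_token: str) -> urllib.request.Request:
    """Prepare a POST request asking for the index status of page_url."""
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": property_url}).encode("utf-8")
    return urllib.request.Request(
        INSPECT_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {access_token}",  # token obtained separately via OAuth
            "Content-Type": "application/json",
        },
        method="POST",
    )


def summarize(response: dict) -> str:
    """Pull the headline fields out of an inspection response, with fallbacks if absent."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    verdict = status.get("verdict", "UNKNOWN")
    coverage = status.get("coverageState", "n/a")
    last_crawl = status.get("lastCrawlTime", "never")
    return f"{verdict}: {coverage} (last crawl: {last_crawl})"


# Usage (requires a real token and a verified property):
# req = build_inspect_request("https://example.com/blog/new-post", "sc-domain:example.com", token)
# with urllib.request.urlopen(req) as resp:
#     print(summarize(json.load(resp)))
```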

While GSC is powerful, repeatedly requesting indexing for the same page won’t help. Focus on creating high-quality content and a technically sound site. Explore MEGA SEO’s tools for automated content optimization and technical SEO improvements.

Maintain Good Indexing Health

Once your site is indexed, maintaining its health is an ongoing process. Think of it like tending a garden—consistent care ensures continued growth and visibility. Here’s how to keep your site in top shape for Google:

Regular Content Updates & Audits

Fresh, high-quality content is key for SEO success. Regularly updating your content keeps it relevant for users and signals to Google that your site is active. This can lead to more frequent crawling and better rankings. Auditing your content periodically also helps identify areas for improvement, ensuring all your pages align with current SEO best practices and user search intent. Consider updating older blog posts, refreshing statistics, or adding new insights to keep your content current and engaging. A strong link profile is also essential, so review your backlinks regularly, and reserve Google’s disavow tool for large numbers of spammy or artificial links that have caused, or are likely to cause, a manual action. Use MEGA SEO’s automated content generation features to create high-quality, optimized content that will engage your audience and improve your search rankings.
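A content audit can start with something as simple as a list of pages that haven’t been touched in a while. This sketch flags pages whose last update is older than a cutoff. The one-year default is an arbitrary assumption, and the update dates are assumed to come from your CMS or your sitemap’s `lastmod` values.

```python
from datetime import date, timedelta


def stale_pages(last_updated: dict[str, date], today: date, max_age_days: int = 365) -> list[str]:
    """Return URLs not updated within max_age_days, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [url for url, updated in last_updated.items() if updated < cutoff]
    return sorted(stale, key=lambda url: last_updated[url])


# Hypothetical pages and last-updated dates.
pages = {
    "/blog/2021-seo-trends": date(2021, 3, 1),
    "/blog/indexing-guide": date(2024, 4, 10),
    "/blog/site-speed-tips": date(2022, 11, 15),
}
print(stale_pages(pages, today=date(2024, 6, 1)))
# → ['/blog/2021-seo-trends', '/blog/site-speed-tips']
```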

Monitor & Improve Site Performance

A slow website can hinder Googlebot’s ability to crawl your pages efficiently, so check your website speed regularly and address any performance issues. Server response time matters most for indexing: when your server responds slowly or returns errors, Googlebot reduces how much it crawls, so fewer of your pages get crawled on each visit. Use tools like Google PageSpeed Insights to identify areas for improvement, such as optimizing images and minimizing HTTP requests. A fast-loading site not only improves crawling but also enhances user experience, leading to lower bounce rates. Schedule a demo to see how MEGA SEO can help you monitor and improve your site’s performance.
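PageSpeed Insights gives the full picture, but a quick script can track server response time between audits. This sketch uses Python’s standard library to time how long each request takes to deliver the first byte of the response body, then compares the median against a budget. The 0.6-second budget is an assumption to adjust for your own site, and each sample includes DNS lookup and connection setup because `urllib` opens a fresh connection for every request.

```python
import time
import urllib.request
from statistics import median


def response_times(url: str, samples: int = 5, timeout: float = 10.0) -> list[float]:
    """Fetch url several times; return seconds until the first body byte arrives."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read(1)  # stop after the first byte of the body
        timings.append(time.perf_counter() - start)
    return timings


def is_slow(timings: list[float], budget_s: float = 0.6) -> bool:
    """True if the median timing exceeds the (assumed) budget; timings must be non-empty."""
    return median(timings) > budget_s


# Usage against a live site:
# timings = response_times("https://example.com/")
# print(is_slow(timings))
```

Using the median rather than the mean keeps one unusually slow request from skewing the result.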

Stay Up-to-Date with Google’s Guidelines

Google’s algorithms and best practices are constantly evolving, so staying informed about these changes is crucial for maintaining good indexing health. Subscribe to the Google Search Central Blog and follow industry experts to stay ahead of algorithm updates and understand how they might affect your site. Adhering to Google’s guidelines ensures your content meets its quality standards, which can positively impact your site’s visibility. MEGA SEO’s Maintenance Agent can automatically update your content to align with the latest algorithm changes, saving you time and effort while ensuring your site remains optimized. Explore MEGA SEO’s resources to learn more about maintaining good indexing health.

Frequently Asked Questions

Why is Google indexing important for my website? Google indexing is how Google finds, understands, and organizes your web pages. Without it, your site is effectively invisible to searchers. When your site is indexed, it becomes part of Google’s vast library of web pages, allowing it to appear in search results and reach a wider audience. This visibility is essential for attracting organic traffic and growing your online presence.

How can I tell if my site is indexed by Google? The simplest way to check is to perform a “site:” search in Google. Type “site:yourwebsite.com” (replace “yourwebsite.com” with your actual domain) into the Google search bar. If your site is indexed, you’ll see a list of pages from your website in the search results. If nothing shows up, your site likely isn’t indexed. Keep in mind that “site:” results aren’t a complete list of your indexed pages, so for a reliable picture use the Page indexing report in Google Search Console, which shows which pages are indexed and why others aren’t.

What can I do if my site isn’t indexing properly? Several factors can affect indexing, from technical issues to content quality. Start by checking Google Search Console for any crawl errors or manual actions. Ensure your sitemap is accurate and submitted through Search Console. Review your robots.txt file to make sure you’re not unintentionally blocking Googlebot. If you’ve recently updated content, request a recrawl through Search Console. Consider using MEGA SEO’s automated tools to help identify and fix technical issues, optimize content, and manage your site’s SEO health.
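One sign of an inaccurate sitemap is a URL you list for indexing while also blocking it in robots.txt. This sketch cross-checks the two files with Python’s standard library. The file contents are hypothetical, and Python’s `urllib.robotparser` applies the first matching rule rather than Google’s most-specific-match logic, so treat any hits as leads to verify in Search Console.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls_blocked(sitemap_xml: bytes, robots_txt: str, user_agent: str = "Googlebot") -> list[str]:
    """Return sitemap <loc> URLs that robots.txt disallows for user_agent."""
    root = ET.fromstring(sitemap_xml)
    urls = [(loc.text or "").strip() for loc in root.findall("sm:url/sm:loc", NS)]
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    return [url for url in urls if url and not robots.can_fetch(user_agent, url)]


SITEMAP = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/private/pricing-draft</loc></url>
</urlset>"""

ROBOTS = "User-agent: *\nDisallow: /private/\n"

print(sitemap_urls_blocked(SITEMAP, ROBOTS))
# → ['https://example.com/private/pricing-draft']
```

Each hit means either the sitemap entry should go or the robots.txt rule is broader than intended.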

How can I speed up the indexing process for my website? While you can’t directly control Google’s indexing timeline, you can optimize your site to make it easier for Google to find and index your content. Submit your sitemap through Google Search Console, ensure your site has a clear internal linking structure, create high-quality, original content, and build backlinks from reputable sources. MEGA SEO offers tools to automate many of these tasks, streamlining the process and potentially speeding up indexing.
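Internal links are one of the main ways Googlebot discovers pages, so it’s worth checking how many clicks each page sits from your homepage and whether any pages aren’t linked at all. This sketch runs a breadth-first search over an internal link map; the map and page list are hypothetical and would normally come from a crawl of your site and your sitemap.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # the first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(all_pages: list[str], links: dict[str, list[str]], home: str) -> list[str]:
    """Pages (e.g. from your sitemap) that no chain of internal links reaches."""
    return sorted(set(all_pages) - set(click_depths(links, home)))


links = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/new-post"],
    "/blog/new-post": ["/"],
}
sitemap_pages = ["/", "/about", "/blog", "/blog/new-post", "/blog/old-guide"]
print(click_depths(links, "/"))
# → {'/': 0, '/blog': 1, '/about': 1, '/blog/new-post': 2}
print(orphan_pages(sitemap_pages, links, "/"))
# → ['/blog/old-guide']
```

Orphaned pages are good candidates for links from related posts or category pages.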

Does MEGA SEO guarantee faster Google indexing? No tool can guarantee instant or specific indexing times, as Google controls the indexing process. However, MEGA SEO helps optimize your site for better crawlability and higher quality content, which are factors that Google considers when indexing. By using MEGA SEO, you’re improving your site’s overall SEO health and making it more likely to be indexed efficiently.